shauray singh

computers can see, hear and learn. welcome to the future!

An idiot admires complexity. A genius admires simplicity. A physicist tries to make it simple. Anything that is gaining complexity, more the idiot will admire it. If you make something more clusterfuck that he can't understand, he will think you are a god because you made it so complicated nobody can understand it.

- Terry Davis

// work

01/2024 - present

ML guy @ ModelsLab

Writing performant kernels for diffusion models, mostly in CuTe DSL now and mostly for Blackwell these days. Did experiments with distributed training, distillation, and some SRPO. Mostly working on video models now: making them faster and squeezing better quality out of open-source models. Also building tools and launching products.

- I'm all about PyTorch, so if you're familiar with that ecosystem, we're off to a great start.

- I've got a love-hate relationship with CUDA and Triton: love the performance, hate the debugging headaches (the torch profiler I don't hate, though).

- I'm always on the lookout for ways to parallelize model inference, so if you've got experience with parallel processing, let's chat.

updates on twitter

01/2023 - 04/2023

Senior Staff @ Zummit InfoLabs

Helped design companies' ML pipelines for better and faster deployment and improved access times.

10/2022 - 01/2023

Jr. Data Scientist @ Zummit InfoLabs

I led a team of 5 interns and made significant improvements to demand prediction: it started out with statistical methods and was later migrated to graph neural networks. I learned a lot about GNNs and how spatio-temporal graphs can make things faster and easier than some traditional methods.

// Education

08/2020 - 10/2024

B.Tech @ Manipal University Jaipur

Major in Data Science, minor in Finance. This is where I got really deep into deep learning by browsing the internet and watching random lectures on YouTube in my dorm room.

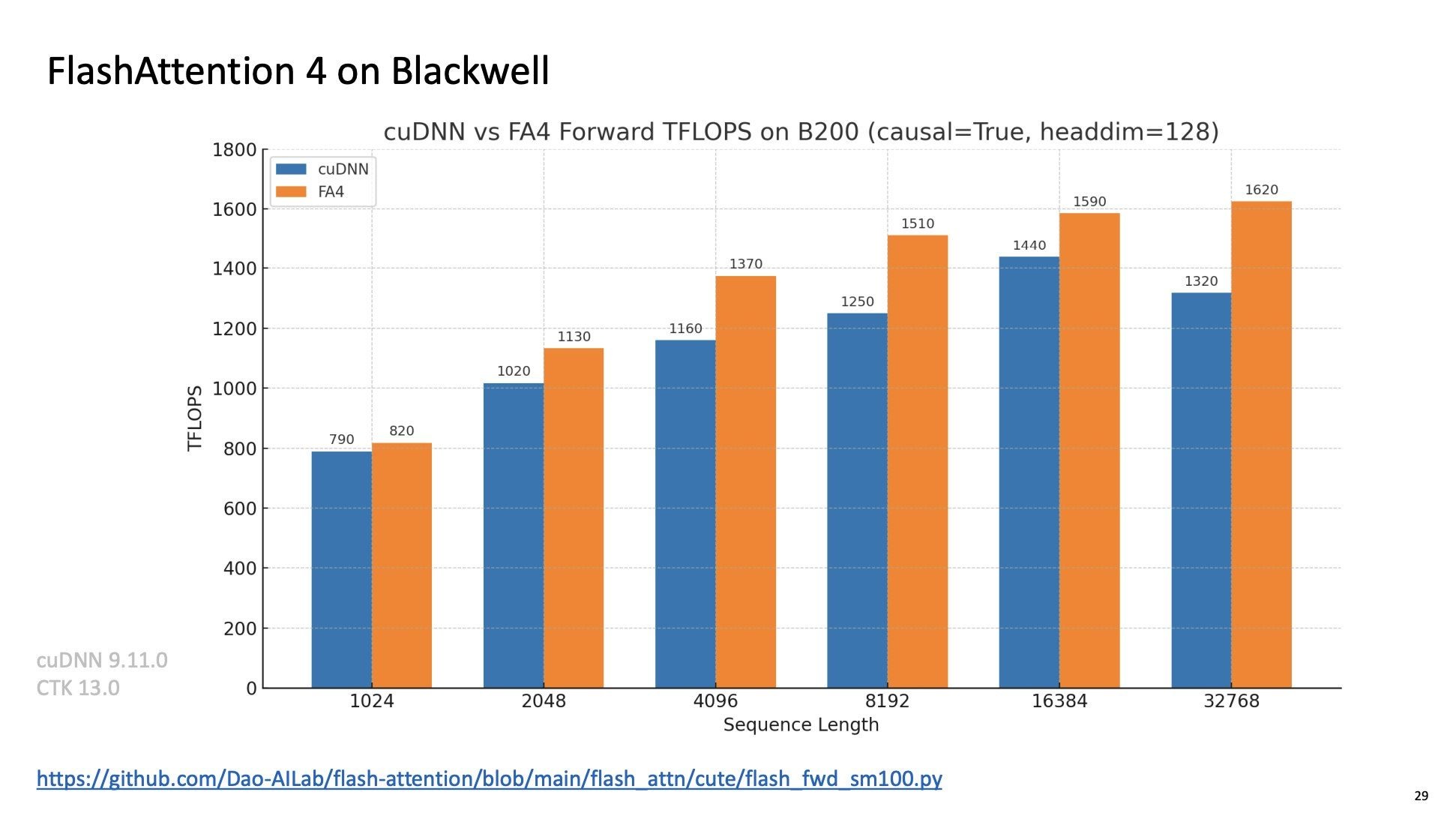

// blackwell optims for wan2.2

this is what i'm working on in my free time right now: mostly gathering up free-lunch optimizations and writing some CuTe DSL kernels. currently i'm adapting tri dao's FA4 for wan2.2. since i don't need any causal masking or kv-cache optimizations, i'm stripping it down to the bare bones and measuring what %SOL (percent of speed-of-light) i can achieve with the knowledge i have. i also post a lot of profiles from these optimizations on x.
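a rough sketch of the arithmetic behind %SOL for a non-causal attention forward pass, assuming a compute-bound view: achieved TFLOPS divided by the GPU's peak dense TFLOPS. the function names are hypothetical (not from FA4 or any profiler), softmax FLOPs are ignored, and the peak number is something you'd take from your GPU's datasheet. Nsight Compute's own SOL metrics are defined per hardware unit, so treat this as a back-of-the-envelope number.

```python
def attention_fwd_flops(batch, heads, seq_len, head_dim):
    # Two matmuls per head: Q @ K^T and P @ V, each 2 * S * S * D FLOPs.
    # No causal masking, so the full S x S score matrix is counted.
    return 4 * batch * heads * seq_len * seq_len * head_dim


def percent_sol(flops, elapsed_s, peak_tflops):
    # Achieved throughput as a percentage of the hardware peak.
    achieved_tflops = flops / elapsed_s / 1e12
    return 100.0 * achieved_tflops / peak_tflops
```

plug in your own shapes, the measured kernel time from the profiler, and the datasheet peak for your precision; dropping the causal mask is exactly why the full `seq_len * seq_len` term is counted here.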

// open source

I have a deep, deep love for open source. It's the lifeblood of the tech world, and I'm all in! I was a regular contributor over at Hugging Face, rolling up my sleeves and getting things done. From Transformers to tokenizers, I'm in the trenches, making things better. Sharing some of that wisdom through blogs and some low-quality tweets! So, remember, open source is where it's at.

// project graveyard

GRAT-X for FLUX - Unofficial implementation of GRAT-X for FLUX.1-dev. It's a criss-cross attention mechanism that should be fast, at least on huge images.

Film Grain - some post-processing nodes for videos and images; a simple repo that ports ComfyUI post-processing nodes to plain Python functions.

Astra Video - Random experiments with video models, mainly an interpolation experiment with IP-Adapters to generate a raw 16 fps video, plus some more multi-frame interpolation work with the LTX-Video family of models.

Distil V0.1 - Random experiments with flow- and diffusion-based models; most notably, it has code for noise injection, DPM adapted to flow matching, and a bunch more.

Regional Prompting SD3.5 - Ported SD3.5 to work with Training-free Regional Prompting for Diffusion Transformers. Results aren't that crazy, but the idea was pretty cool, at least at the time.

Turing - was supposed to be just a simple library built on top of NumPy that would calculate gradients for your neural network, with some additional features to help during development. BUT since then my vision for Turing has grown a little: it now has a C backend for tensor support with custom matmul accelerators, AVX-512 for multi-core CPUs, and a CUDA backend. Basically, this is what PyTorch is, but PyTorch is way, way, way more complex; I wanted something not so complex but still powerful enough!
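the core idea of that original NumPy version, computing gradients by walking the computation graph backwards, can be sketched as a tiny scalar reverse-mode autodiff. this is a hypothetical illustration: the `Value` class and its methods are made up for this example and are not Turing's actual API or code.

```python
import math


class Value:
    """Hypothetical scalar node that records the ops that produced it."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # pushes self.grad into the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b, d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def _backward():
            # d tanh(x)/dx = 1 - tanh(x)^2
            self.grad += (1.0 - t * t) * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topological order, so each node's grad is complete before it propagates.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            v._backward()


# usage: d/dx of x*x + x at x = 3 is 2*3 + 1 = 7
x = Value(3.0)
y = x * x + x
y.backward()
print(x.grad)  # 7.0
```

gradients are accumulated with `+=` because a node can feed into several ops (here `x` is used three times); a tensor library does the same thing, just with arrays and many more ops.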

OnlyGans - is currently under development and is NSFW. OnlyGans uses a fleet of SOTA generative models to generate naked individuals who don't exist, something like thispersondoesnotexist.com. It uses a version of StyleGAN to generate images and may support videos in the future.

Support this noble cause by contributing to the repo and make this world a better place to live!

Trachea - transforms your speech into text. A custom library turns an audio sample into a mel spectrogram, and then a simple convolutional model squishes and smashes that spectrogram's pixel data into a text representation of the sample! It was a simple project for a deep learning class I took in college.
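a minimal NumPy sketch of the audio-to-mel-spectrogram step, assuming 16 kHz audio, a Hann window, and the common HTK-style mel formula. the function names and default parameters are hypothetical, not the ones from Trachea's custom library, and the conv model on top is left out.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    # Triangular filters whose edges are evenly spaced on the mel scale.
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):   # rising edge
            fb[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge
            fb[m - 1, k] = (right - k) / max(right - center, 1)
    return fb


def mel_spectrogram(signal, sr=16000, n_fft=400, hop=160, n_mels=40):
    # Slice the signal into overlapping windowed frames.
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    # Power spectrum per frame: (n_frames, n_fft // 2 + 1).
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2
    # Project onto the mel filters: (n_frames, n_mels).
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T
    # Log-compress and transpose into an (n_mels, n_frames) "image".
    return np.log(mel + 1e-10).T
```

the output is a 2D array you can treat like a single-channel image, which is what lets a plain convolutional model work on speech.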

Context Tree Weighting - was another college project: I implemented a few different compression algorithms as baselines and then tried implementing Context Tree Weighting, which was not easy. I'd like to get back to it someday; maybe by then I'll have a better understanding of how compression works!!

Calib-Challenge - was a challenge organized by Comma AI. The repo contains code for predicting the yaw and pitch of a moving car from dashcam video. The project kept going after the contest, and now it contains some very basic code for a self-driving car. It's just the software side: there are no boundary conditions yet, and it only outputs raw data that has to be processed before it's handed to the car's computer. Pedal is the operating system for it and is UNIX-ish. I wanna drive a fully autonomous car with this code someday (with some improvements, of course!)

Quadruple Inverted Pendulum - was my first attempt at writing a research paper, and it failed (OF COURSE). The idea was pretty straightforward, actually: 4 pendulums attached to one another, and the task was to balance each one on top of the previous one without anything falling. Turns out deriving the Lagrangian equations for this setup is straightforward but involves a lot of algebra, and just simulating the pendulums takes hundreds of thousands of calculations every second. Then I found something very useful called GameGAN, but again there was no open-source code for it, and I DROPPED THE IDEA!

misc - I've built a lot of other random stuff over time. GO check it out on my GitHub! And if you wanna make a change in the world and, as George Hotz says, "win over nature", find a project on GitHub (or maybe I can help you find one). Visit

https://github.com/shauray8, fork some repositories, and start adding stuff!

// misc unsorted brain dump

- Who wants to work on "error correction to keep cells from becoming cancerous"? Message me on Twitter!!

- I can't really find any good articles on the sigmoid curve of innovation; maybe I'll write a blog post on it!!

- Some of my tweets on deep learning

- A while back I got interested in Phage Mutation (I don't know the reason). Here's something that'll get you started

- Finding Beauty in Bad Algorithms

- We can arrange some kind of discussion on the hard problem of consciousness!! I think it's a fairly misunderstood topic. Message me on Twitter and maybe we can set something up.

- I've learned a lot from George Hotz and his coding streams!! go have a look!

- Loss function Tumblr - Andrej Karpathy's collection of funny loss functions.

- I got interested in hardware first and wasn't a software person at the start. My first hands-on hardware experience was when I was 8 or 9; that's when I started assembling computers, and that kinda stayed with me. I still have that love for hardware.

we must know, we will know

stem stuff. requires attention span.

See Twitter for microblogs; this is for full-length technical deep dives. Writing on STEM-related topics and experimenting with the Feynman technique.